miso

howto: make a cup of soup

In a previous post, I related how I discovered the power of GPT-4 to help me write an experimental web-based IDE (integrated development environment), and how this led me to question the whole “let’s make a language and an operating system and a computer” project.

In a nutshell:

If everyone’s going to be interacting with their computers through natural language, what’s the point of a programming language?

This definitely took me a little while to process. In the age of Machine Learning, does “programming” even have any meaning as a term any more?

And the answer is: yes, it does - in fact, more so than ever. Far from “putting programmers out of work”, I’ve got a feeling that the Machine Learning Revolution™ will actually turn more of us than ever into programmers.

Note: in this and future pieces I’ll use the term Machine Learning (“ML” and “ML systems”) in preference to Artificial Intelligence (AI) or Deep Learning or whatever. Because, reasons.

a question of trust

Ask your ML-powered kitchen robot to bake you a cake, and you might tolerate a little variability in the results. Ask your ML-powered Airbus A380 to land itself in a hurricane-force crosswind, and you want to be damn sure that what happens is what you expect to happen.

Issuing a command to an inscrutable matrix of floating-point numbers, and getting essentially unpredictable results back, might be fine for games, art, or music, but it’s not going to fly in real life.

And this will remain true, no matter how powerful ML systems get.

People use contracts - manuals, operating procedures, agreements, instructions, specifications - to govern their interactions, so they can do business with less friction and more confidence. As artificial minds become more commonplace, we’ll need something similar to govern our interactions with them. And the more important ML systems become in our lives, the more important those contracts will become.

Programs, I propose - whatever language they’re expressed in - are those contracts. They specify, in minute detail, the operations that the user should expect the machine to perform in response to a command.

If we’re going to interact successfully with ever-more-powerful ML systems, we’re going to have to pay close attention to those programs, and understand them, using nothing but the power of our own tiny little noggins. Fail in that task, and even the most mind-blowing ML systems of the future will be useless to us.

The good news is that those programs are going to be written in a language we can all understand.

programs all the way down

Turns out, the world is full of such “natural language programs” - and not just text, either, but visual ones too; and they’ve been around for a while.

Just a few super-obvious examples:

recipes

Recipes have to combine precision with “rule-of-thumb” prescriptions; describe events happening in sequence and in parallel; specify resource management and preparation; talk about timeliness and termination conditions; use impenetrable jargon of uncertain and ancient origin; and mix implementation detail with high-level intent - in fact, all the kinds of things we do when writing real-time distributed software.

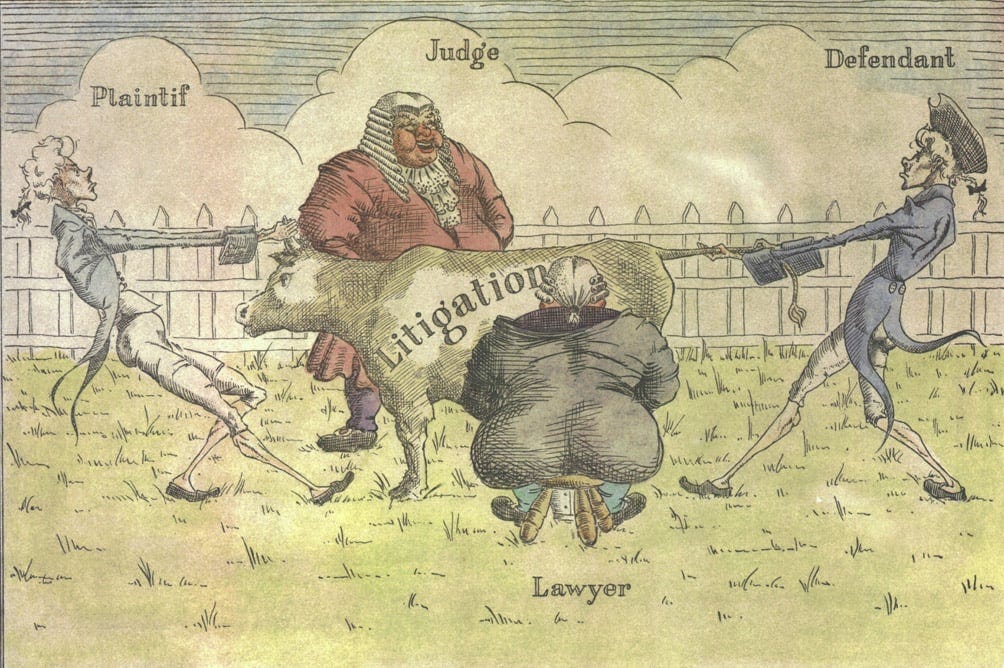

legal contracts

Legal contracts are attempts to specify, in exacting detail, the behaviour expected of both parties to an agreement: the “happy path” of correct-as-intended execution, as well as the “exception conditions” that cover foreseeable mishaps.

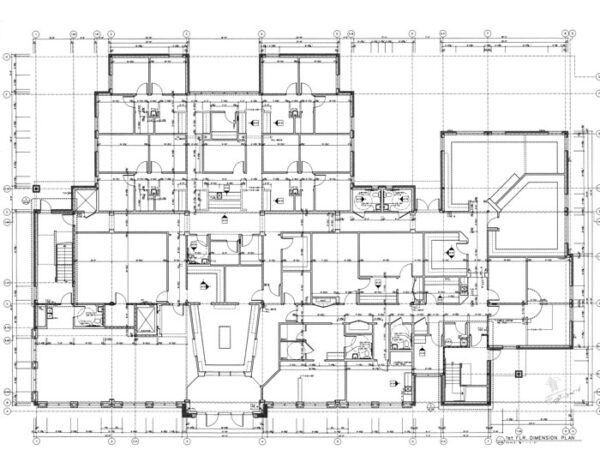

architecture and construction

Specifying what has to be built, proving it’s fit for function, then specifying all the parts and configurations and orchestrating how everything comes together at the right time: all of this clearly requires some pretty damn rigorous specification-fu.

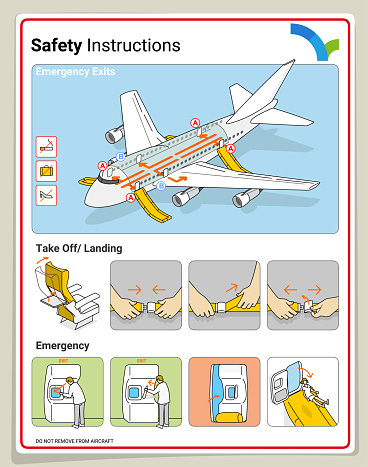

emergency instructions

I’m one of those people who always reads the instructions and counts the number of rows to the closest emergency exit. Better to know it and not need it, than need it and not know it.

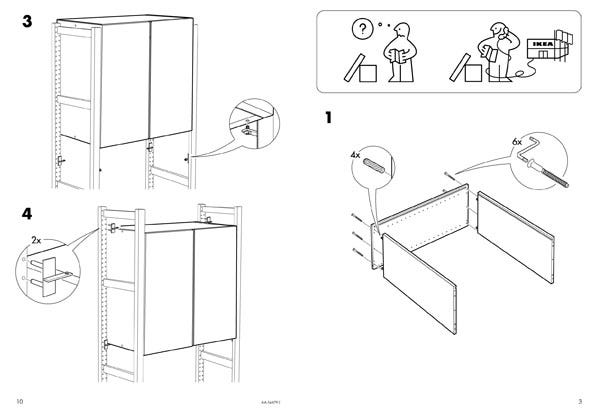

flat-pack furniture

As every true adult knows, you’re not truly an adult until you’ve spent an afternoon locked in mortal combat with a Rakkestad or a Songesand.

surgical teams

Turns out surgical teams that use the Surgical Safety Checklist halve their risk of complications compared to teams that don’t.

commercial aviation

Pilots, even experienced ones, use checklists to deal with both routine and exceptional circumstances.

the checklist manifesto

The Checklist Manifesto (Atul Gawande) is a book (and a writer) I continually recommend to people - a book with a seemingly banal subject, but one that is actually fascinating. Yes, I’m a nerd.

The thing about checklists, as the book elucidates, is that they impose a minimal “cognitive load” on the hapless human who has to follow them - in other words, they’re easy for people to deal with, especially in fast-developing situations with a high degree of stress.

They’re short and to the point, and there’s always a small number of items on each list (people can comfortably hold only up to seven items in their short-term memory, apparently; in my experience, it’s more like three). They’re an almost perfect distillation of information transfer.

So I thought, well, what would a programming language / environment look like that was based on checklists?

The result was an experimental prototype called miso (make it so).

make it so

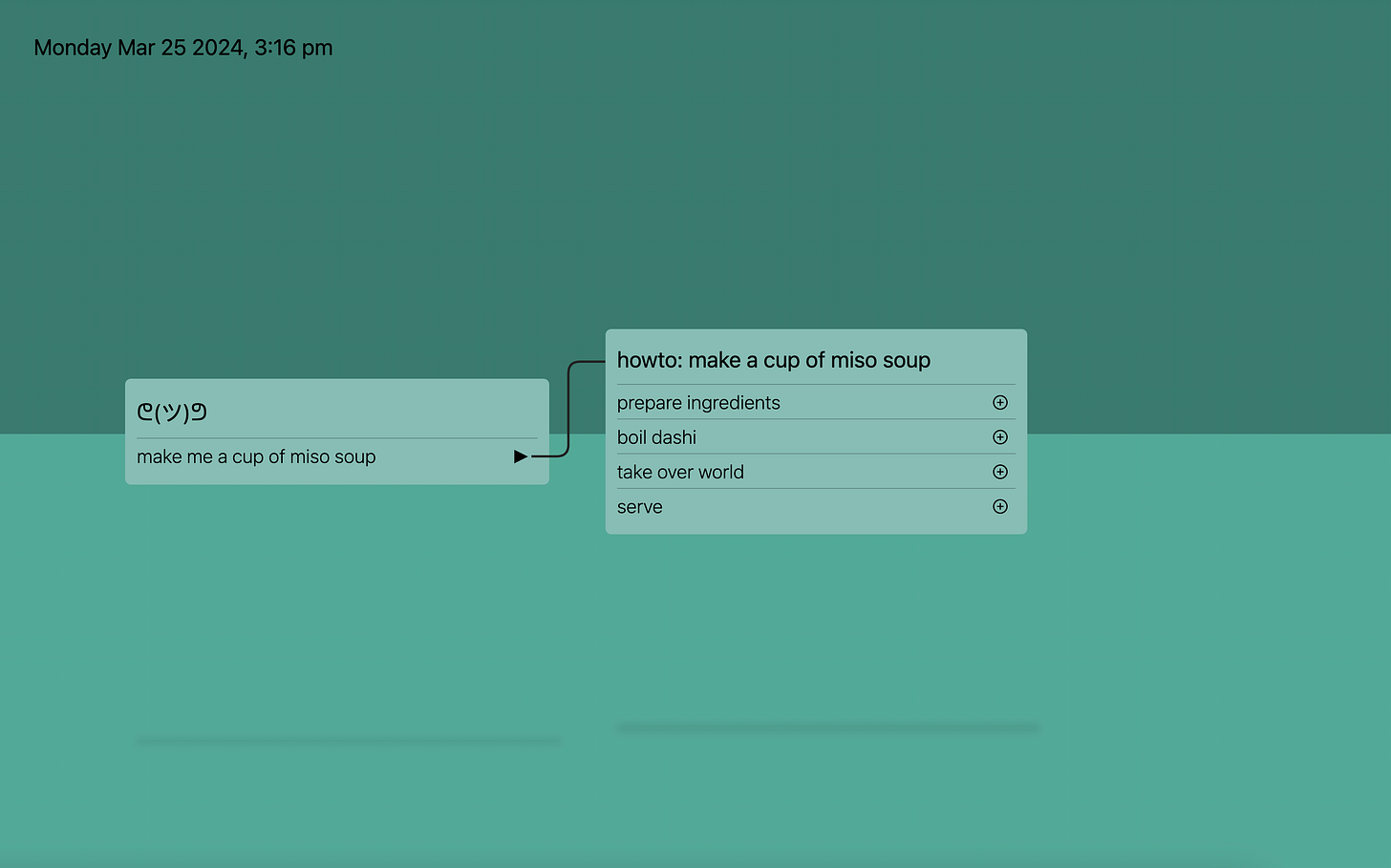

The idea of miso is simple: you start by typing in a one-line command, in natural language. In this imaginary example, miso is connected to a kitchen robot.

If miso has a checklist card that corresponds to your command, it’ll pull it up, check that it’s the right card to run, and run it. If not, it creates a little ‘⊕’ button to the right of your command line. If you click on it (or just hit enter) miso creates a new, blank card, like this:

miso now goes away and searches the internet and does some ML magic, and fills in the checklist for you:

You can now look over miso’s suggestion, and edit it so that it’s more in line with your expectations:

You can now move on to filling in the details of the second-level tasks. Click the ‘⊕’ button to the right of ‘prepare ingredients’, for instance, and miso pops up a new card that it creates automatically:

Once again, you edit the card until you’re happy with it (removing, in this case, the reference to paperclips); and then proceed to expand the “boil dashi” command, as follows:

Anyway, you see how this goes. At each step, miso fills in what it thinks is the right sequence of sub-tasks for any task you want to complete, and you edit it until it’s right.
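For the code-minded, here is a minimal Python sketch of that find-or-create-then-edit loop. It is an illustration only, not miso’s actual code: the names `Card`, `Deck`, `suggest_steps` and `CANNED` are hypothetical, the “ML magic” is replaced by a canned lookup table, and the card contents (apart from “prepare ingredients”, “boil dashi” and the paperclips) are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Card:
    """One checklist card: a command plus its ordered sub-tasks."""
    command: str
    steps: List[str] = field(default_factory=list)


# Hypothetical canned suggestions standing in for the ML step.
# A real system would query a model here; these contents are made up.
CANNED: Dict[str, List[str]] = {
    "make a cup of soup": ["prepare ingredients", "boil dashi", "serve"],
    "prepare ingredients": ["fetch miso paste", "fetch tofu", "fetch paperclips"],
}


def suggest_steps(command: str) -> List[str]:
    """Return a fresh list of suggested sub-tasks (empty if nothing is known)."""
    return list(CANNED.get(command, []))


class Deck:
    """All the cards created so far, keyed by their command text."""

    def __init__(self, suggest: Callable[[str], List[str]]) -> None:
        self.cards: Dict[str, Card] = {}
        self.suggest = suggest

    def find_or_create(self, command: str) -> Card:
        """Pull up the existing card, or create one pre-filled with suggestions."""
        card = self.cards.get(command)
        if card is None:
            card = Card(command, self.suggest(command))
            self.cards[command] = card
        return card


deck = Deck(suggest_steps)
soup = deck.find_or_create("make a cup of soup")
prep = deck.find_or_create("prepare ingredients")

# The human stays in the loop: edit the suggestion until it looks right.
prep.steps.remove("fetch paperclips")
```

The one design choice worth noting is that the suggestion is only a starting point: the card stored in the deck is whatever the human leaves behind after editing, and asking for the same command again returns that edited card rather than a fresh guess.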

Now, of course, this “tree of tasks” might extend down for quite a few levels. Right down at the bottom of the tree will be cards that issue instructions (in this case) to the cooking robot, such as “pick up”, “put down”, “turn on hob”, and so on. So rather than manually approve everything all the way down, at some point you might just sigh and say “OK, miso, go ahead and fill in all the cards in the tree all the way down”.
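That “fill in everything all the way down” step is essentially a recursive walk that stops at the robot’s primitive instructions. A rough sketch, under stated assumptions: `fill_tree`, `toy_suggest` and the toy task table are hypothetical names invented for illustration, the suggester is a stub rather than a real ML call, and only the three primitives come from the text above.

```python
from typing import Callable, Dict, List, Optional

# Primitive robot instructions named in the post; these need no card of their own.
PRIMITIVES = {"pick up", "put down", "turn on hob"}

# Hypothetical toy task table standing in for the ML suggester.
TOY: Dict[str, List[str]] = {
    "make a cup of soup": ["boil dashi", "serve"],
    "boil dashi": ["turn on hob"],
    "serve": ["pick up", "put down"],
}


def toy_suggest(command: str) -> List[str]:
    """Stub suggester: return a copy of the canned sub-tasks, if any."""
    return list(TOY.get(command, []))


def fill_tree(
    command: str,
    suggest: Callable[[str], List[str]],
    cards: Optional[Dict[str, List[str]]] = None,
    depth_left: int = 8,
) -> Dict[str, List[str]]:
    """Create a card for `command` and, recursively, for every sub-task.

    Recursion stops at primitives and at commands that already have a card
    (which also guards against cycles in the suggestions).
    """
    if cards is None:
        cards = {}
    if command in PRIMITIVES or command in cards:
        return cards
    if depth_left == 0:
        raise RecursionError(f"task tree too deep at {command!r}")
    steps = suggest(command)
    cards[command] = steps  # an empty list means a blank card for a human to fill
    for step in steps:
        fill_tree(step, suggest, cards, depth_left - 1)
    return cards


tree = fill_tree("make a cup of soup", toy_suggest)
```

The depth limit and the “already has a card” check are there because an automatic suggester has no obligation to produce a finite tree; without them, one circular suggestion would keep the recursion going until Python gave up.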

so now what

With the help of GPT-4, I managed to get the miso prototype working tolerably well after a few days of hacking. I started with a few simple coding tasks, such as doing my expenses, something I hate doing manually.

I found that while this simplistic approach to “programming in natural language” could be made to work, there were quite a few wrinkles in practice.

In particular, it needed something akin to a “debugging” workflow: if, having got miso to auto-fill all the cards in the tree, you ask it to make you a cup of soup and (having served you the steaming bowl) it attacks you with a meat cleaver, you need some way to stop it, figure out why it’s acting up, and amend its instructions.

In general, though, it gradually became clear the checklist format was too restrictive. There were some problems that stubbornly resisted being neatly organised into task lists - I could write them down compactly in free-form, but expressed as checklists they mushroomed into over-complex trees of cards.

As I tried to get my head around this, I realised I had to try going in the opposite direction: I had to make something that could analyse actual existing code, and turn it into something that ordinary humans could work with.